ARS-121GL-NB2B-LCC

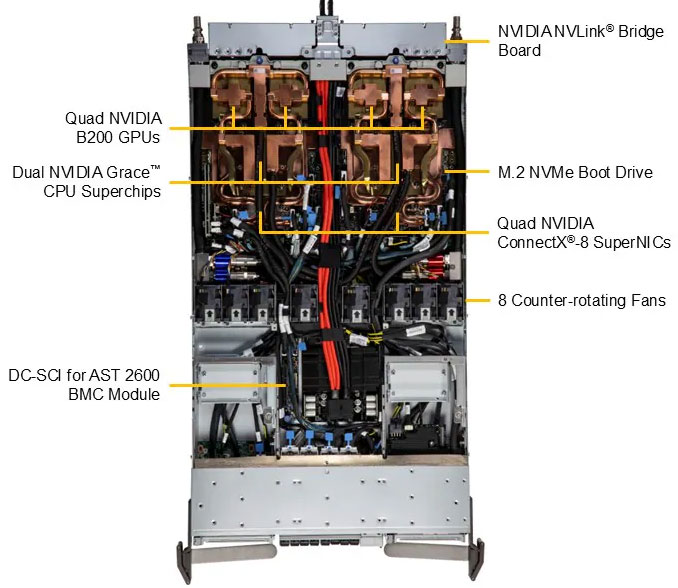

1U GPU server supporting dual NVIDIA 72-core Grace CPUs with liquid cooling

- NVIDIA GB200 with 4 NVIDIA NVLink® (GB200 NVL4)

- Dual NVIDIA 72-core Grace CPUs with liquid cooling

- Quad NVIDIA B200 GPUs with liquid cooling

- 960GB Onboard Memory

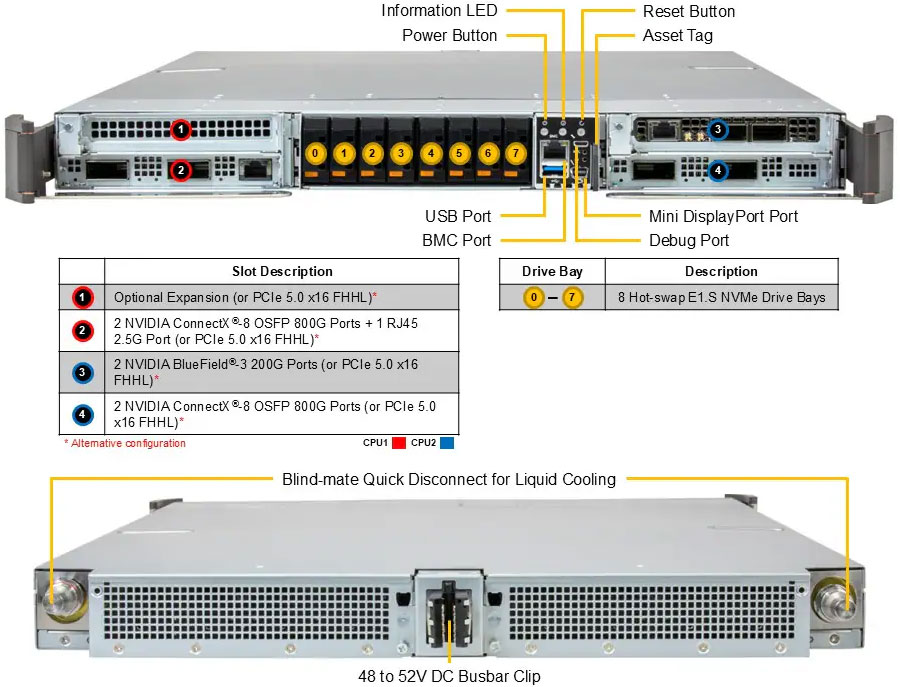

- Quad 800G ConnectX®-8 + 2 PCIe 5.0 x16 slots OR 4 PCIe 5.0 x16 slots

- 4 to 8 front hot-swap E1.S NVMe drive bays

- 48-54V DC Busbar

Key Applications

- High Performance Computing

- AI/Deep Learning Training

- Large Language Model (LLM) and Generative AI

Datasheet

| Product SKU | ARS-121GL-NB2B-LCC |

| Motherboard | Super GPU-NVGB200-NVL4 |

| Processor | |

| CPU | Dual processor(s); NVIDIA Dual 72-core CPUs on a Grace™ CPU Superchip |

| Note | Supports up to 300W TDP CPUs (Liquid Cooled) |

| GPU | |

| Max GPU Count | 4 onboard GPUs |

| CPU-GPU Interconnect | NVLink®-C2C |

| GPU-GPU Interconnect | Fifth-Generation NVIDIA NVLink™ |

| System Memory | |

| Memory | Slot Count: Onboard Memory; Max Memory: Up to 960GB ECC LPDDR5X |

| On-Board Devices | |

| Chipset | System on Chip |

| Network Connectivity | 1 RJ45 2.5GbE with Intel® I210-AT (optional); 4 OSFP 800Gb InfiniBand with NVIDIA ConnectX®-8 SuperNIC (optional); 2 QSFP-DD 200Gb InfiniBand with NVIDIA® BlueField®-3 (optional) |

| Input / Output | |

| LAN | 1 RJ45 1 GbE Dedicated BMC LAN port (ASPEED AST2600) |

| USB | 1 Type-A port (Front) |

| Video | 1 Mini-DP port (Front) |

| TPM | 1 TPM Onboard TPM & TPM Header |

| System BIOS | |

| BIOS Type | AMI 64MB SPI Flash EEPROM |

| Management | |

| Software | SuperCloud Composer®; Supermicro Server Manager (SSM); Super Diagnostics Offline (SDO); Supermicro Thin-Agent Service (TAS); SuperServer Automation Assistant (SAA) |

| Power configurations | Power-on mode for AC power recovery; ACPI Power Management |

| Security | |

| Hardware | Trusted Platform Module (TPM) 2.0 |

| Features | Secure Firmware Updates |

| PC Health Monitoring | |

| CPU | Monitors for CPU Cores, Chipset Voltages, Memory |

| FAN | Fans with tachometer monitoring; Status monitor for speed control; Pulse Width Modulated (PWM) fan connectors |

| Temperature | Monitoring for CPU and chassis environment; Thermal Control for fan connectors |

| Chassis | |

| Form Factor | 1U Rackmount |

| Model | CSE-MG104TS-V01DP-NVL4 |

| Dimensions and Weight | |

| Height | 1.7" (43.6 mm) |

| Width | 17.26" (438.4 mm) |

| Depth | 30.15" (766 mm) |

| Package | 9.05" (H) x 24.8" (W) x 45.27" (D) |

| Weight | Gross Weight: 79.36 lbs (36 kg); Net Weight: 70.5 lbs (32 kg) |

| Available Color | Silver |

| Expansion Slots | |

| PCI-Express (PCIe) Configuration | Default: 2 PCIe 5.0 x16 (in x16) FHFL slots; Option A: 4 PCIe 5.0 x16 (in x16) FHFL slots |

| Drive Bays / Storage | |

| Drive Bays Configuration | Default: Total 8 bays (8 front hot-swap E1.S PCIe 5.0 x4 NVMe drive bays); Option A: Total 4 bays (4 front hot-swap E1.S PCIe 5.0 x4 NVMe drive bays) |

| M.2 | 1 M.2 PCIe 5.0 x4 NVMe slot (M-key, 22110 (default)) |

| System Cooling | |

| Fans | 8x 4cm heavy duty fans with optimal fan speed control |

| Liquid Cooling | Direct to Chip (D2C) Cold Plate |

| Power Supply | Power via Busbar (48-54V DC) |

| Operating Environment | |

| ROHS | RoHS Compliant |

| Environmental Spec. | Operating Temperature: 10°C to 35°C (50°F to 95°F); Non-operating Temperature: -40°C to 60°C (-40°F to 140°F); Operating Relative Humidity: 8% to 80% (max 21° DP; non-condensing); Non-operating Relative Humidity: 8% to 90% (max 38° DP; non-condensing) |
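The operating ranges listed above can be turned into a simple pre-deployment check. The following is a minimal sketch, not a Supermicro tool: the function names `c_to_f` and `within_operating_envelope` are hypothetical, and only the operating temperature (10°C to 35°C) and operating relative humidity (8% to 80%) from the table are used. Dew point limits are not modeled.

```python
# Illustrative sketch: checks a measured inlet condition against the
# operating ranges stated in the datasheet. Names are hypothetical.

OPERATING_TEMP_C = (10.0, 35.0)      # from "Operating Temperature"
OPERATING_RH_PERCENT = (8.0, 80.0)   # from "Operating Relative Humidity"


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def within_operating_envelope(temp_c: float, rh_percent: float) -> bool:
    """Return True if both temperature and humidity are inside the stated ranges."""
    temp_ok = OPERATING_TEMP_C[0] <= temp_c <= OPERATING_TEMP_C[1]
    rh_ok = OPERATING_RH_PERCENT[0] <= rh_percent <= OPERATING_RH_PERCENT[1]
    return temp_ok and rh_ok


if __name__ == "__main__":
    low, high = OPERATING_TEMP_C
    print(f"Operating range: {c_to_f(low):.0f}°F to {c_to_f(high):.0f}°F")
    print(within_operating_envelope(25.0, 50.0))
    print(within_operating_envelope(40.0, 50.0))
```

A real deployment check would also need to account for the dew point limits and for coolant supply conditions, which this datasheet section does not specify.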

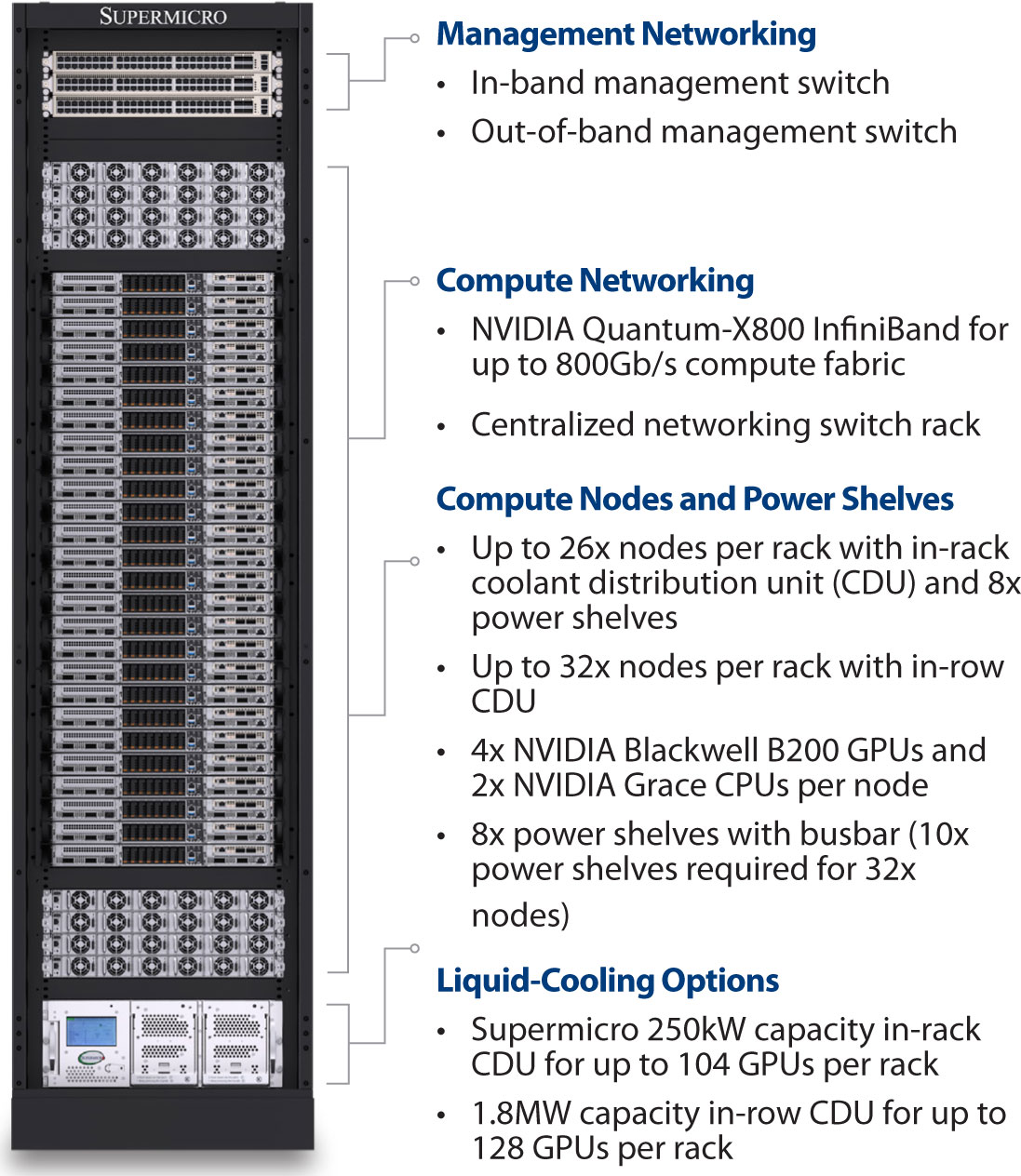

NVIDIA GB200 NVL4: Designed for Accelerated HPC

Supermicro’s NVIDIA GB200 NVL4 rack-scale platform delivers exceptional performance for GPU-accelerated HPC and AI science workloads including molecular simulation, weather modeling, fluid dynamics, and genomics. Each node unifies four NVLink-connected NVIDIA Blackwell B200 GPUs with two NVIDIA Grace CPUs over NVLink-C2C, featuring direct-to-chip liquid cooling and four ports of 800G NVIDIA Quantum InfiniBand networking with 800G dedicated to each GPU.

Scale up to 32 nodes per rack for 128 GPUs in a 48U NVIDIA MGX rack with busbar power distribution and flexible in-rack or in-row CDU options, delivering high rack density for scientific computing and advanced AI research applications.
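The rack-level figures follow directly from the per-node configuration. As a rough sketch of that arithmetic, the snippet below multiplies the per-node counts stated in this datasheet (4 GPUs, 2 CPUs, 4 x 800G InfiniBand ports, 960GB LPDDR5X) by the 32-node rack maximum. The `Node` class and `rack_totals` function are hypothetical names used only for illustration, and the aggregate bandwidth is a simple sum of port speeds, not a measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Per-node figures taken from the datasheet (names are illustrative)."""
    gpus: int = 4                  # quad NVIDIA B200 GPUs
    cpus: int = 2                  # dual NVIDIA Grace CPUs
    ib_ports: int = 4              # four 800G InfiniBand ports
    ib_port_gbps: int = 800        # nominal speed per port, Gb/s
    system_memory_gb: int = 960    # onboard LPDDR5X


def rack_totals(nodes_per_rack: int = 32, node: Node = Node()) -> dict:
    """Multiply per-node figures by the node count to get rack-level totals."""
    return {
        "gpus": nodes_per_rack * node.gpus,
        "cpus": nodes_per_rack * node.cpus,
        "ib_ports": nodes_per_rack * node.ib_ports,
        # Sum of nominal port speeds, converted from Gb/s to Tb/s.
        "ib_tbps": nodes_per_rack * node.ib_ports * node.ib_port_gbps / 1000,
        # Total onboard LPDDR5X, converted from GB to TB (decimal).
        "system_memory_tb": nodes_per_rack * node.system_memory_gb / 1000,
    }


if __name__ == "__main__":
    for key, value in rack_totals().items():
        print(f"{key}: {value}")
```

With the defaults, the GPU total matches the 128 GPUs per rack quoted above; a partially populated rack can be modeled by passing a smaller `nodes_per_rack`.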

NVIDIA GB200 NVL4: ARS-121GL-NB2B-LCC

| Overview | 1U Liquid-cooled System with 2x NVIDIA GB200 Grace Blackwell Superchips |

|---|---|

| CPU and GPU | 2 72-core NVIDIA Grace Arm Neoverse V2 CPUs; 4 NVIDIA Blackwell Tensor Core GPUs |

| CPU Memory | 960GB LPDDR5X |

| GPU Memory | 744GB HBM3e |

| NVLink | 5th Generation NVIDIA NVLink at 1.8TB/s |

| Networking | 2 dual-port NVIDIA ConnectX®-8 SuperNICs (front); 1 dual-port NVIDIA BlueField®-3 DPU (front) |

| Storage | Up to 8 E1.S PCIe 5.0 drives |

| Power Supply | Shared power through 6+2 rack power shelves |